Generative Sample Removal in Continual Learning (Published in CVPR 2025 Workshop SynData4CV)

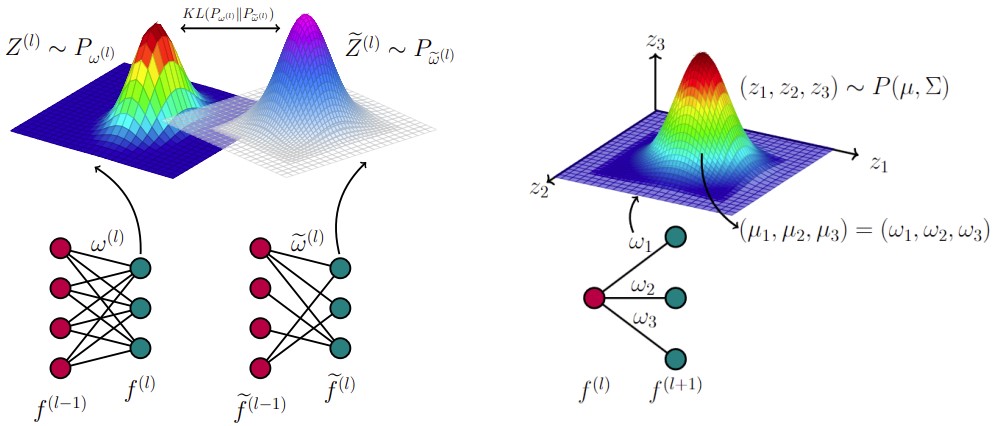

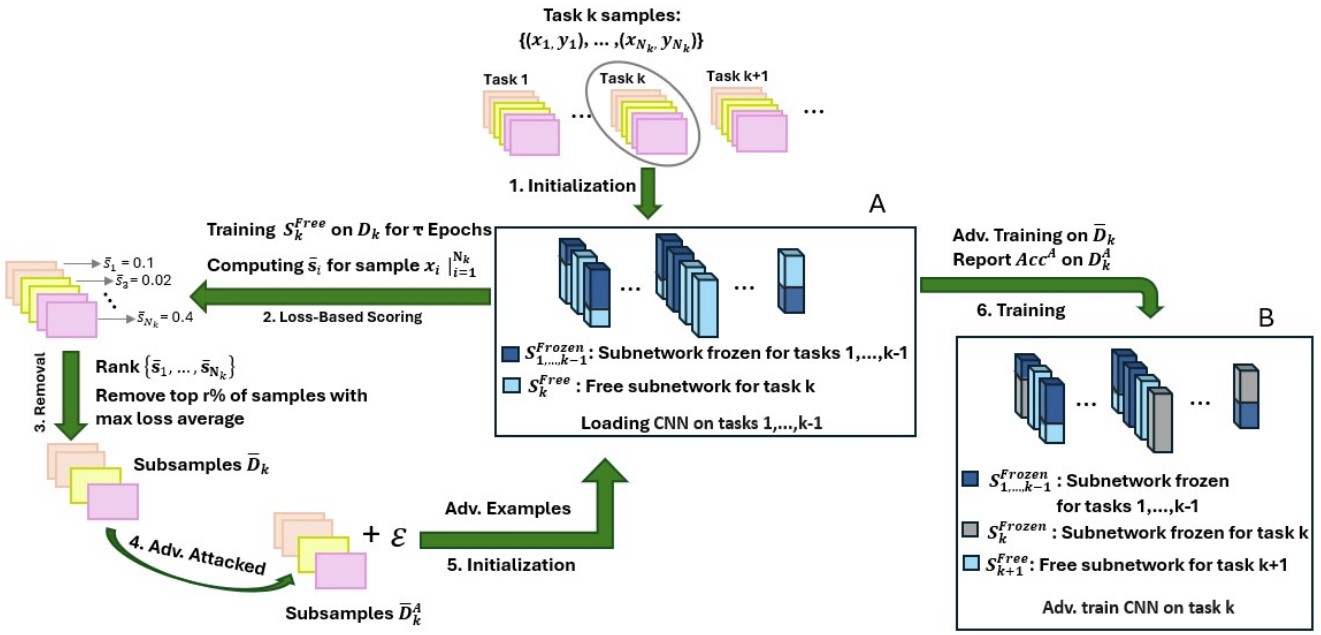

We investigate replacing natural training samples with synthetic data generated by GANs and diffusion models in continual learning, where a model is trained on a sequence of tasks. Our EpochLoss strategy removes uninformative examples, which improves the model's adversarial robustness and generalization.
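The summary above does not specify how EpochLoss decides which examples are uninformative. The sketch below is a hypothetical illustration, not the paper's method. It assumes each sample is scored by its mean training loss across recorded epochs, and that the lowest-scoring fraction (the samples the model already fits easily) is dropped. The names `epoch_loss_scores`, `select_informative`, and `remove_fraction` are illustrative only.

```python
from typing import List, Sequence


def epoch_loss_scores(loss_history: Sequence[Sequence[float]]) -> List[float]:
    """Average each sample's loss over all recorded epochs.

    loss_history[e][i] is the loss of sample i at epoch e (hypothetical layout).
    """
    num_epochs = len(loss_history)
    if num_epochs == 0:
        raise ValueError("loss_history must contain at least one epoch")
    num_samples = len(loss_history[0])
    return [
        sum(epoch[i] for epoch in loss_history) / num_epochs
        for i in range(num_samples)
    ]


def select_informative(
    loss_history: Sequence[Sequence[float]], remove_fraction: float
) -> List[int]:
    """Return sorted indices of samples kept after dropping the lowest-loss fraction.

    Assumption: a consistently low loss marks a sample as uninformative.
    """
    if not 0.0 <= remove_fraction <= 1.0:
        raise ValueError("remove_fraction must be in [0, 1]")
    scores = epoch_loss_scores(loss_history)
    n_remove = int(len(scores) * remove_fraction)
    # Stable sort by score, lowest mean loss first.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i])
    return sorted(ranked[n_remove:])
```

For example, with losses `[[0.9, 0.1, 0.5, 0.05], [0.7, 0.05, 0.4, 0.02]]` over two epochs and `remove_fraction=0.5`, samples 1 and 3 have the lowest mean loss and are removed, so `select_informative` returns `[0, 2]`. In a continual-learning setting, the same filter could be applied to each task's pool of natural and synthetic samples before training on that task.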